Apple recently shared some exciting news: powerful new accessibility features coming to its devices later this year. These tools aim to help people of all abilities use their iPhones, iPads, Macs, Apple Watches, and even Apple Vision Pro more easily, independently, and productively.

Making technology work for everyone is incredibly important, and these updates show how Apple is using techniques like machine learning to create new ways for people to interact with their devices and the world around them. Let’s break down the most significant features announced.

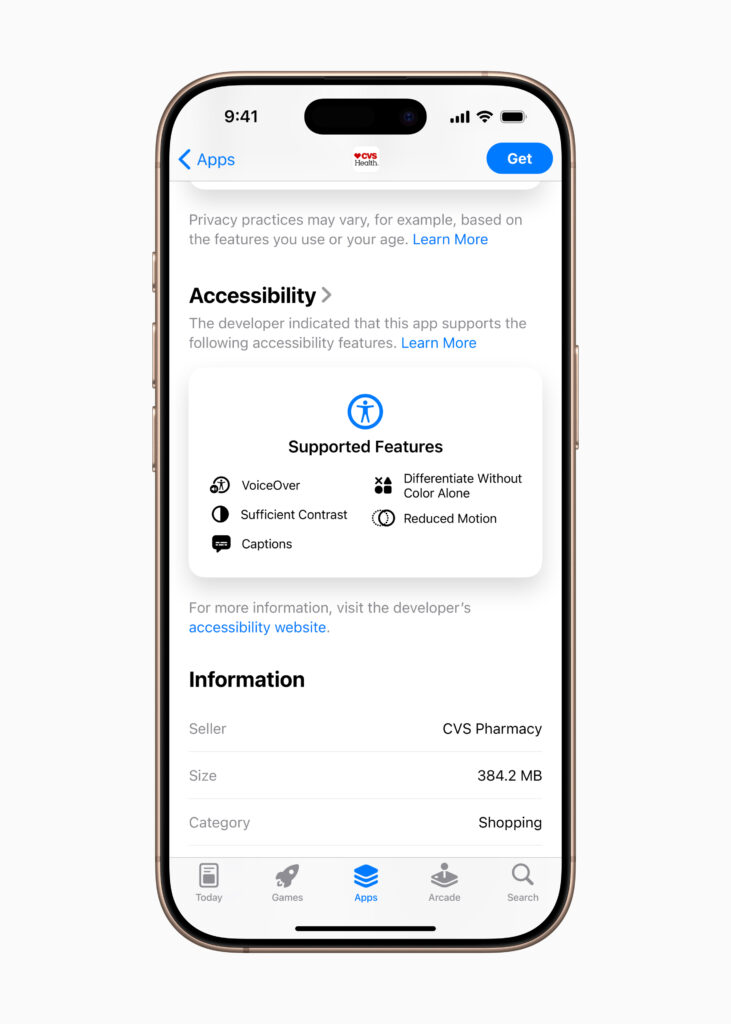

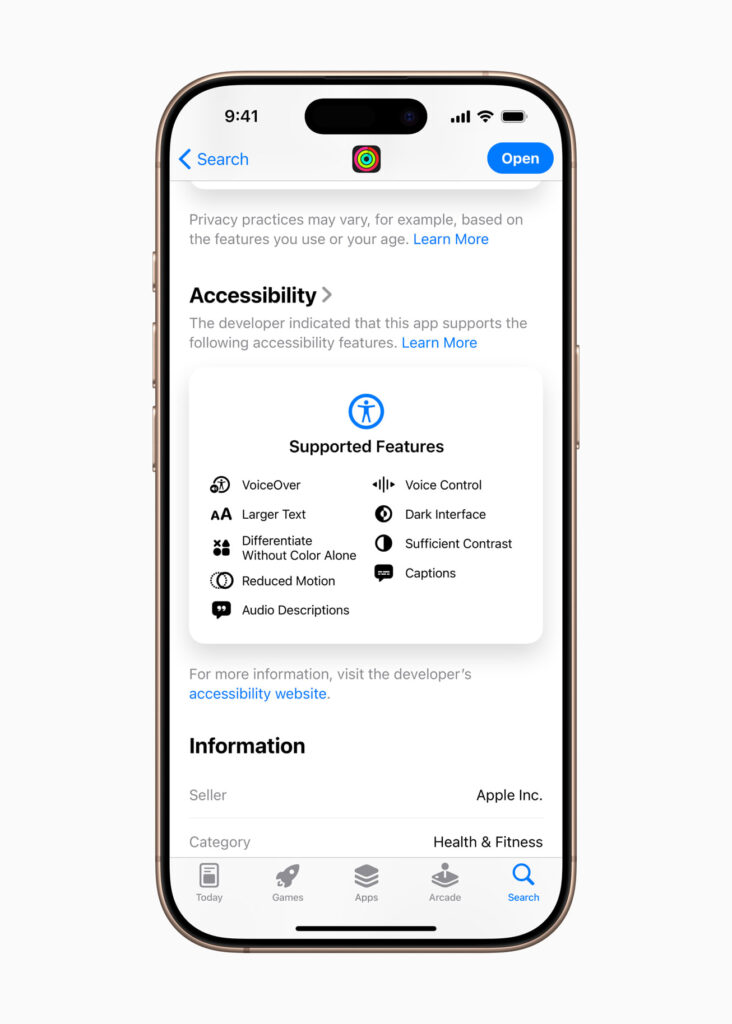

Knowing What You Can Use: Accessibility Nutrition Labels on the App Store

Imagine shopping for groceries and seeing a label that tells you whether a food meets your dietary needs or contains allergens. Apple is doing something similar for apps! “Accessibility Nutrition Labels” are coming to the App Store.

Before you download an app or game, you’ll see a clear section showing which accessibility features it supports, such as whether it works with VoiceOver (Apple’s screen reader for blind and low-vision users), supports larger text, uses high-contrast colors for better visibility, reduces motion for people sensitive to on-screen movement, provides captions for videos, and more.

- How this helps: This is a game-changer for avoiding frustration. You can quickly see if an app is designed to work for you before you even download it, saving time, effort, and disappointment. It helps you confidently choose apps that fit your specific needs and use them independently.

- Who benefits: This is incredibly helpful for anyone who uses accessibility features, especially people who are blind or have low vision, are deaf or hard of hearing, or need adjustments for reading or interaction. Developers also benefit by being able to clearly show users how accessible their apps are.

Bringing the Physical World to Your Screen: Magnifier for Mac

Many people use the Magnifier app on their iPhone or iPad to zoom in on small print or distant objects using the device’s camera. Now, this powerful tool is coming to the Mac! The new Magnifier app for Mac lets you use your computer’s camera (or your iPhone’s camera through a feature called Continuity Camera, or another connected camera) to get a close-up view of anything in front of it. Think of it as a high-tech magnifying glass connected to your big Mac screen.

You can zoom in on a book on your desk, a receipt, a whiteboard across the room, or other physical objects. You can also adjust brightness, contrast, and colors to make things easier to see, and even view multiple things at once, such as a presentation and a document on your desk side by side. Magnifier also works with the new Accessibility Reader (more on that below) to make physical text easier to read.

- How this helps: This makes interacting with the physical world much easier and more independent if you have low vision. You can read documents right on your desk, follow along with presentations, or examine objects without needing a separate handheld magnifier or struggling to see. It brings the physical world right into your computer workspace.

- Who benefits: This feature is a major benefit for users with low vision.

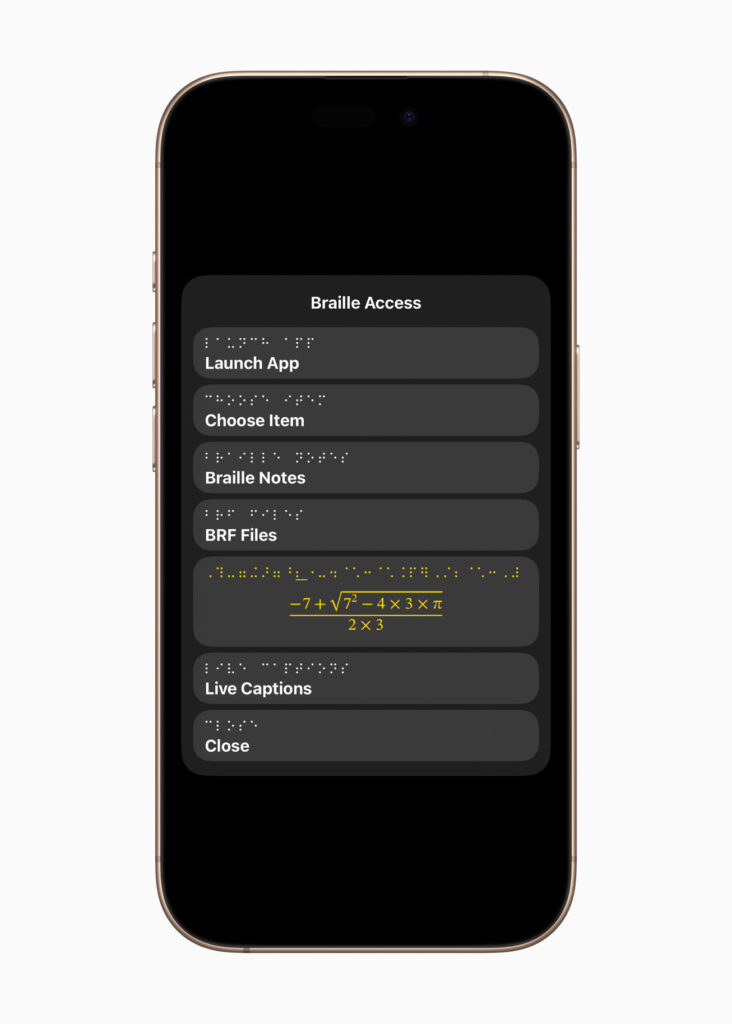

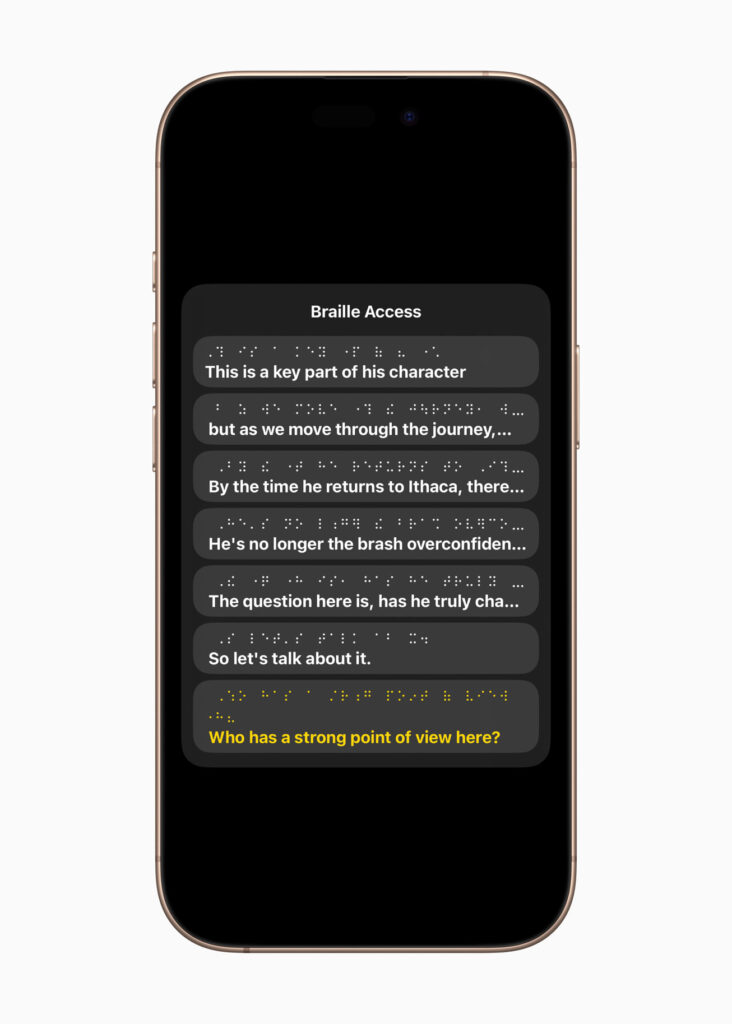

A Breakthrough for Braille Users: Braille Access

This is a truly groundbreaking feature! “Braille Access” turns your Apple device (iPhone, iPad, Mac, or Apple Vision Pro) into a full-featured braille note taker. Instead of buying a separate, dedicated braille note-taking device (which can be very expensive), you can now work in braille directly on your Apple device.

The feature is deeply integrated: you can use braille input (on-screen or through a connected braille display) to take notes, do math using Nemeth Braille (a braille code for mathematics and science), open and read common braille files, and even launch and control apps. A standout capability is “Live Captions” (real-time text transcriptions of spoken words), which can appear directly on a connected braille display during conversations.

- How this helps: This opens up incredible new levels of independence and productivity for braille users. It integrates braille seamlessly into your daily digital life, making tasks like note-taking in class or meetings, doing homework, reading books, and accessing information much more fluid, and potentially more affordable than relying solely on traditional braille note takers. Following live conversations in braille is a powerful new way to participate.

- Who benefits: This feature is specifically designed for users who are blind and rely on braille for reading, writing, and communication, including students and professionals.

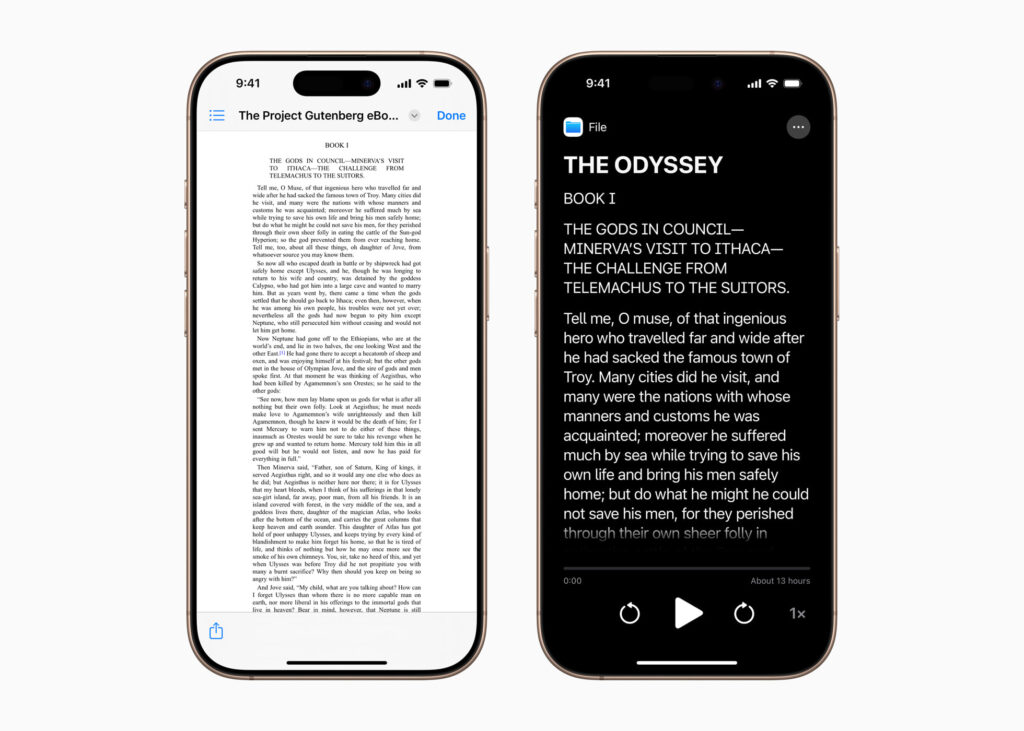

Making Reading Easier, Anywhere: Accessibility Reader

Reading on screens can be tough for many reasons, whether it’s due to small fonts, cluttered layouts, or specific reading difficulties. “Accessibility Reader” is a new reading mode available across Apple devices (iPhone, iPad, Mac, and Apple Vision Pro) designed to make text easier to read for everyone, especially if you have challenges like dyslexia or low vision.

It strips away distractions and lets you customize how text appears, with extensive options for font, size, color, and line spacing, plus the option to have text spoken aloud (using Spoken Content). You can use it in any app, and it’s built into the Magnifier app too, so you can use it to make physical text, such as books or menus, easier to read once Magnifier has captured it.

- How this helps: This puts the control of the reading experience firmly in your hands. You can adjust the text to your specific needs, making reading faster, less tiring, and more enjoyable. Being able to apply these settings anywhere you read, both on-screen and in the physical world, is a huge advantage for staying productive and independent.

- Who benefits: This is excellent for people with dyslexia, low vision, or anyone who struggles with standard text readability on screens.

Seeing What’s Said on Your Wrist: Live Captions on Apple Watch

Apple’s “Live Listen” feature already lets you use your iPhone as a remote microphone that sends sound directly to compatible headphones or hearing aids, helping you hear conversations or sounds better from a distance. Now, Apple is bringing “Live Captions” to Apple Watch!

While Live Listen is active on your iPhone, you’ll see a real-time text transcription of what the iPhone hears, appearing right on your watch. You can also use your watch to control the Live Listen session (start or stop it, or jump back if you missed something), all without needing to grab your phone.

- How this helps: This is a game-changer for discreetly following conversations, lectures, meetings, or presentations from a distance. You can simply glance at your wrist to read the captions, giving you a new level of participation, understanding, and independence in various settings without having to constantly handle or look at your phone.

- Who benefits: This feature is specifically for users who are deaf or hard of hearing.

Seeing More with Spatial Computing: Enhanced Accessibility on Apple Vision Pro

Apple Vision Pro, the new spatial computer you wear, is also getting powerful accessibility updates that take advantage of its advanced cameras. Updates to “Zoom” let you magnify not just digital content but also the physical world around you as Vision Pro sees it. For VoiceOver users, “Live Recognition” uses computer vision (a form of AI) to describe your surroundings, identify objects, and read text for you. Plus, a new tool for developers means approved accessibility apps, such as Be My Eyes (which connects blind users with sighted volunteers for visual assistance), can now access the Vision Pro camera to provide live, person-to-person help with visual interpretation.

- How this helps: This makes navigating and understanding your environment much easier and more independent when using Vision Pro. You get hands-free visual assistance, whether from AI descriptions or connecting with a human helper, opening up new possibilities for interacting with the world around you while experiencing spatial computing.

- Who benefits: These updates are particularly helpful for users who are blind or have low vision and are using or considering using Apple Vision Pro.

Other Helpful Tools: Head Tracking & Shortcuts

Apple is also adding other useful features, including “Head Tracking,” which will allow you to control your iPhone or iPad using just movements of your head. This provides a new hands-free way to navigate and interact with your device. There are also new “Shortcuts,” like “Hold That Thought” to quickly capture ideas so you don’t forget them, and an “Accessibility Assistant” shortcut on Vision Pro to help you find accessibility features that best suit your needs.

- How this helps: Head Tracking offers a new input method for people who have difficulty using their hands. The new Shortcuts help streamline tasks and make finding accessibility settings easier.

- Who benefits: Head Tracking is great for users with mobility impairments that affect their hands. Shortcuts can benefit anyone who wants to automate tasks or get quick help finding features, and the Accessibility Assistant is particularly helpful for anyone exploring which accessibility options suit them best.

What This All Means: More Independence and New Possibilities

These new features represent a significant step forward in making technology truly usable for a wider range of people. They aren’t just minor tweaks; they are powerful tools that leverage Apple’s hardware and software working together to create new possibilities for independence and productivity.

Consider the Braille Access feature, for example. In the past, a braille user needing a portable device for notes, calculations, and reading would often need a separate, dedicated braille note-taker that could cost thousands of dollars and might not connect easily with their other devices. Now, their iPhone or iPad can become that note-taker, seamlessly integrated with their apps, cloud storage, and communication tools. This levels the playing field and offers unprecedented flexibility and affordability.

Similarly, Magnifier for Mac and Accessibility Reader together allow someone with low vision not only to magnify physical text but also to transform it into a format they can read comfortably or have read aloud. That removes barriers to printed information like books, menus, or documents in ways that weren’t easily possible on a computer before. Live Captions on Apple Watch, meanwhile, offers a discreet, real-time way for deaf or hard of hearing individuals to follow conversations in public or professional settings, fostering greater inclusion.

Google and Microsoft also offer many valuable accessibility features: Google has tools like TalkBack, Live Transcribe, and magnification, and Microsoft offers Narrator, Magnifier, and Immersive Reader. Apple’s strength, however, is often its deep integration across its ecosystem. These new features show how Apple builds accessibility into the core of its operating systems and hardware, allowing features like Braille Access or the combined Magnifier and Reader to work smoothly across devices and in many different apps.

Overall, these announcements highlight a future where technology adapts more powerfully to the individual, rather than requiring the individual to adapt to the technology. They open doors to greater participation, learning, work, and connection for people with disabilities, empowering them to live more independent and productive lives.

Statement of AI Assistance:

This blog post was generated with the assistance of Google Gemini. Gemini was given a detailed prompt asking it to analyze the text of Apple’s press release announcing the new accessibility features, identify the key features and details in that text, and synthesize them into a structured blog post: explaining each feature in simple language, identifying its target audience and benefits, and drafting a conclusion based on the specific requirements provided. Is this a good use of Generative AI? Why or why not? Let me know in the comments below!

The post Easier for Everyone: A Look at Apple’s Upcoming Powerful New Accessibility Features (2025) appeared first on Assistive Technology Blog.